Deploying to ECS

This guide provides a way to quickly get Spacelift up and running on an Elastic Container Service (ECS) Fargate cluster. In this guide we show a relatively simple networking setup where Spacelift is accessible via a public load balancer, but you can adjust this as long as you meet the basic networking requirements for Spacelift.

To deploy Spacelift on ECS you need to take the following steps:

- Deploy your basic infrastructure components.

- Push the Spacelift images to your Elastic Container Registry.

- Deploy the Spacelift backend services using our ECS Terraform module.

Overview

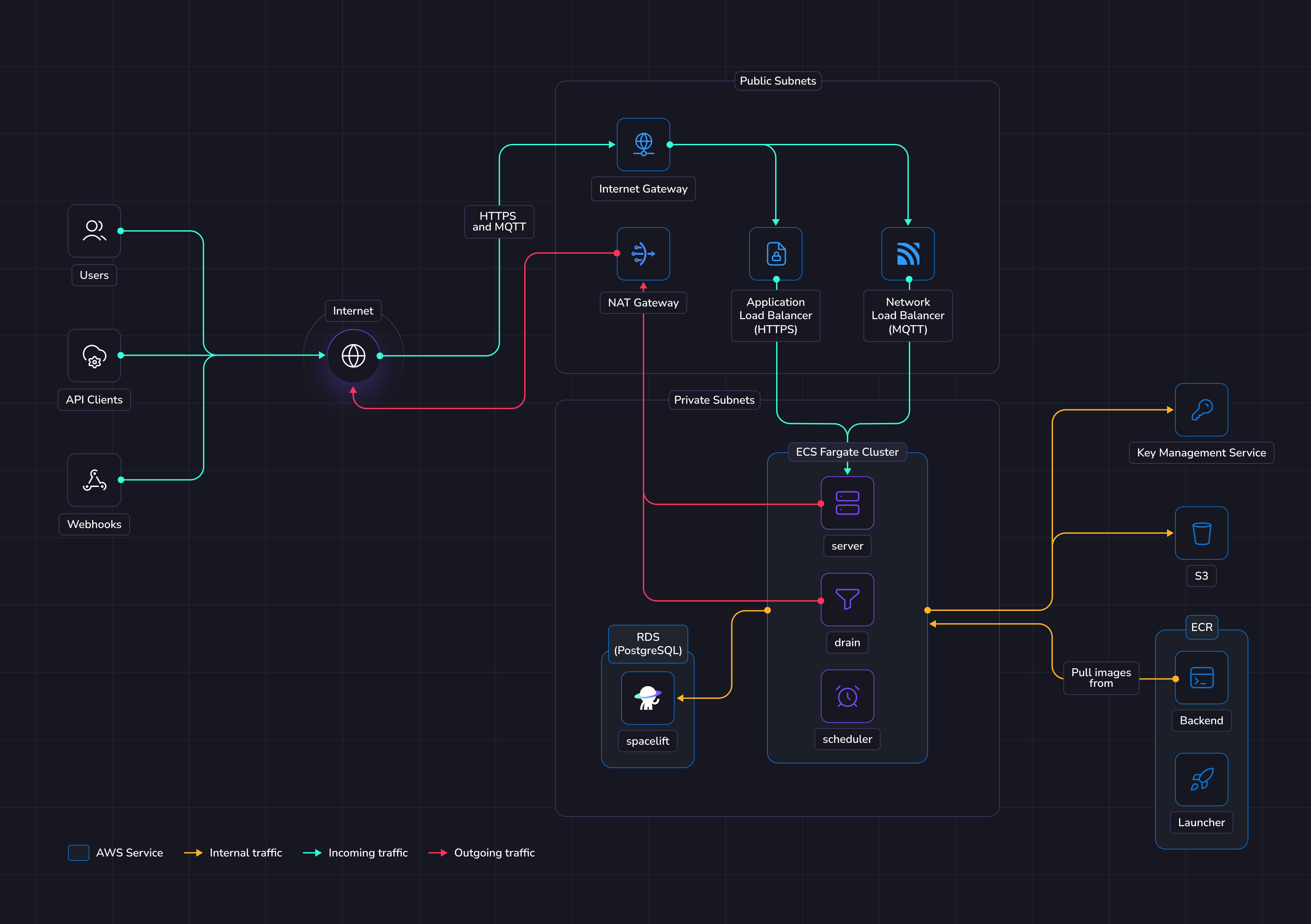

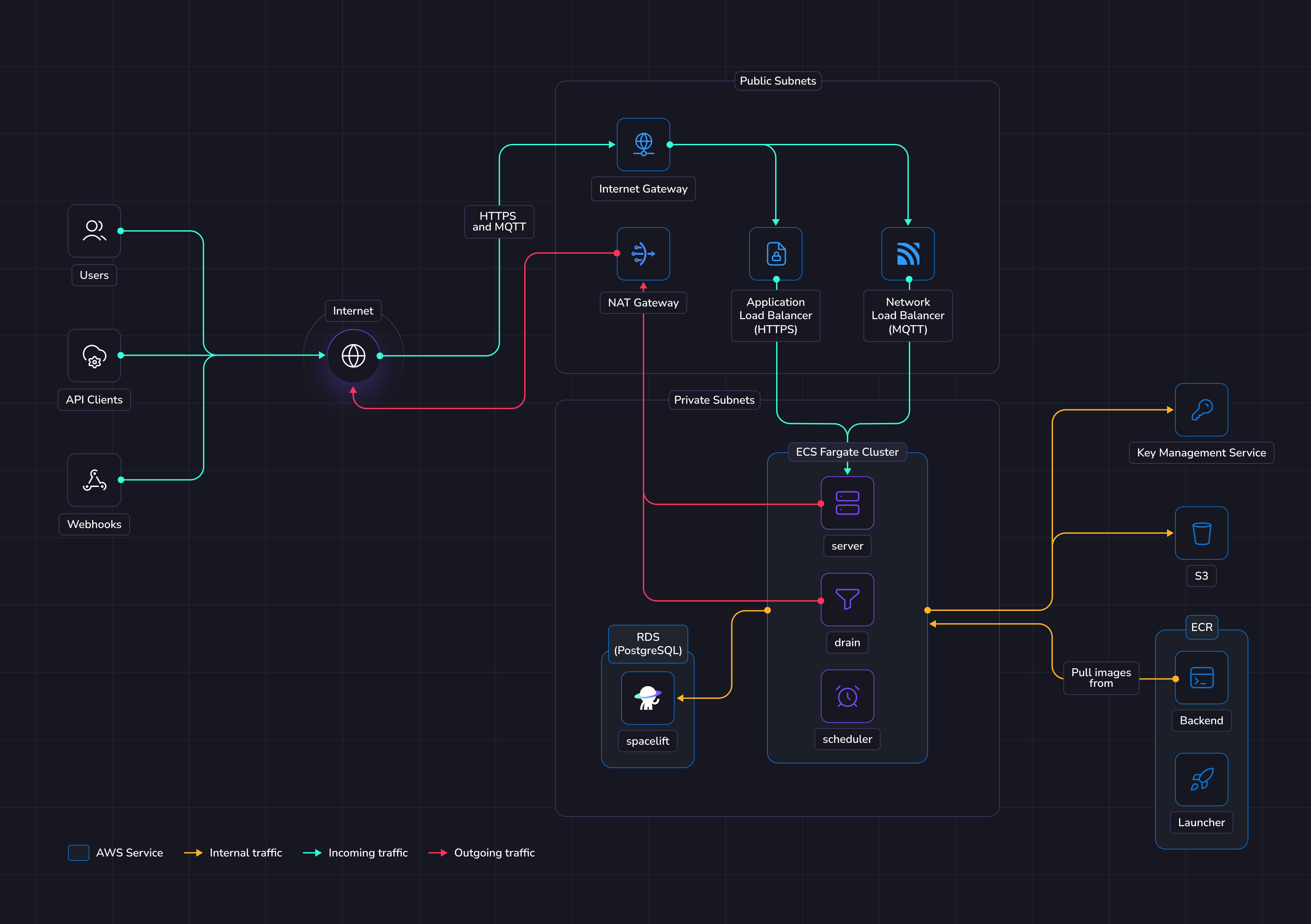

The illustration below shows what the infrastructure looks like when running Spacelift in ECS.

Networking

Info

More details regarding networking requirements for Spacelift can be found on this page.

This section will solely focus on how the ECS infrastructure will be configured to meet Spacelift's requirements.

In this guide we'll create a new VPC with public and private subnets. The public subnets will contain the internet-facing components, such as the load balancers for the server and MQTT endpoints, to allow communication between Spacelift and the external internet.

The private subnets contain the Spacelift RDS Postgres database, along with the Spacelift ECS services and are not directly accessible via the internet.

Object Storage

The Spacelift instance needs an object storage backend to store Terraform state files, run logs, and other data such as modules, policy inputs and workspaces.

Several S3 buckets will be created in this guide. This is a hard requirement for running Spacelift.

More details about object storage requirements for Spacelift can be found here.

Database

Spacelift requires a PostgreSQL database to operate. In this guide we'll create a new Aurora Serverless RDS instance.

You can also reuse an existing instance and create a new database in it. In that case you'll have to adjust the database URL and other settings across the guide.

It's also up to you to configure appropriate networking to expose this database to Spacelift's VPC.

You can set the create_database option to false in the Terraform module to disable creating an RDS instance.

More details about database requirements for Spacelift can be found here.

ECS

In this guide, we'll deploy a new ECS Fargate cluster to run Spacelift. The Spacelift application can be deployed using the terraform-aws-ecs-spacelift-selfhosted Terraform module.

The Terraform module will deploy the ECS cluster, associated resources like IAM roles, and the following Spacelift services:

- The scheduler.

- The drain.

- The server.

The scheduler is the component that handles recurring tasks. It creates new entries in a message queue when a new task needs to be performed.

The drain is an async background processing component that picks up items from the queue and processes events.

The server hosts the Spacelift GraphQL API, REST API and serves the embedded frontend assets. It also contains the MQTT server to handle interactions with workers. The server is exposed to the outside world using an Application Load Balancer for HTTP traffic, and a Network Load Balancer for MQTT traffic.

Workers

In this guide Spacelift workers will be deployed as an EC2 autoscaling group, using the terraform-aws-spacelift-workerpool-on-ec2 Terraform module.

Requirements

Before proceeding with the next steps, make sure the tools used in this guide are installed on your computer: the AWS CLI, Docker, and OpenTofu (or Terraform).

Info

In the following sections of the guide, OpenTofu will be used to deploy the infrastructure needed for Spacelift. If you are using Terraform, simply swap tofu for terraform.
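Before starting, it can be useful to confirm that the command-line tools this guide relies on are available. The following is a minimal sketch rather than official tooling: `check_tools` is a hypothetical helper name, and the tool list in the usage comment is an assumption based on the commands used later in this guide.

```shell
# check_tools: hypothetical helper that reports which commands are missing from PATH.
# Prints "missing: <tool>" for each absent tool and returns 1 if any are missing.
check_tools() {
  local status=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      status=1
    fi
  done
  return "$status"
}

# Usage (assumed tool list; swap tofu for terraform if that is what you use):
#   check_tools aws docker tar tofu
```

The function only inspects `PATH`, so it does not verify tool versions or AWS credentials.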

Server certificate

To be able to reach your Spacelift installation using HTTPS, we need a valid certificate to serve it under secure endpoints.

Before jumping into deploying Spacelift, we first need to create a valid ACM certificate.

We are going to use DNS validation to make sure the issued certificate is valid, so you need to have access to your DNS zone to add the ACM certificate validation CNAME entries to it.

The Spacelift infrastructure module will take as an input an ACM certificate ARN.

You can either create one yourself manually or provision it with OpenTofu alongside the rest of your infrastructure.
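If you prefer to create the certificate from the command line, the sketch below uses the AWS CLI rather than OpenTofu. It assumes the AWS CLI is already configured with credentials for the target account. The function names are hypothetical helpers; the `aws acm` subcommands they wrap are standard AWS CLI calls.

```shell
# request_certificate: hypothetical helper that requests a DNS-validated ACM
# certificate for a domain in a given region and prints the certificate ARN.
request_certificate() {
  local domain="$1"
  local region="$2"
  aws acm request-certificate \
    --region "$region" \
    --domain-name "$domain" \
    --validation-method DNS \
    --query CertificateArn \
    --output text
}

# validation_records: prints the CNAME record(s) that must be added to your DNS zone.
validation_records() {
  local cert_arn="$1"
  local region="$2"
  aws acm describe-certificate \
    --region "$region" \
    --certificate-arn "$cert_arn" \
    --query 'Certificate.DomainValidationOptions[].ResourceRecord' \
    --output table
}

# wait_until_issued: blocks until ACM reports the certificate as validated.
wait_until_issued() {
  local cert_arn="$1"
  local region="$2"
  aws acm wait certificate-validated --region "$region" --certificate-arn "$cert_arn"
}

# Usage (example values):
#   arn="$(request_certificate spacelift.example.com eu-west-1)"
#   validation_records "$arn" eu-west-1   # add these CNAMEs to your DNS zone
#   wait_until_issued "$arn" eu-west-1
```

The certificate should be requested in the same region you deploy Spacelift to, since the load balancer can only use certificates from its own region.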

Deploy infrastructure

We provide Terraform modules to deploy Spacelift's infrastructure requirements as well as a module to deploy the Spacelift services to ECS.

Some parts of these modules can be customized to avoid deploying parts of the infrastructure in case you want to handle that yourself.

For example, you may want to disable the database if you already have a Postgres instance and want to reuse it, or you may want to provide your own IAM roles or enable CloudWatch logging.

Before you start, set a few environment variables that will be used by the Spacelift modules:

```shell
# Set this to the AWS region you wish to deploy Spacelift to, e.g. "eu-west-1".
export TF_VAR_aws_region="<aws-region>"

# Set this to the domain name you want to access Spacelift from, e.g. "spacelift.example.com".
export TF_VAR_server_domain="<server-domain>"

# Set this to the domain name you want to use for MQTT communication with the workers, e.g. "mqtt.spacelift.example.com".
export TF_VAR_mqtt_domain="<mqtt-domain>"

# Set this to the username for the admin account to use during setup.
export TF_VAR_admin_username="<admin-username>"

# Set this to the password for that account.
export TF_VAR_admin_password="<admin-password>"

# Extract this from your archive: self-hosted-v3.0.0.tar.gz
export TF_VAR_spacelift_version=v3.0.0

# Set this to the token given to you by your Spacelift representative.
export TF_VAR_license_token="<license-token>"

# If you want to pass your own ACM certificate, set the following variable to the ARN of the
# certificate you want to use. Please note, the ACM certificate must be successfully
# issued, otherwise deploying the services will fail.
#
# ℹ️ If you have configured your certificate using OpenTofu or Terraform in the previous step, you can just ignore this
# variable since you might want to reference the aws_acm_certificate_validation resource directly.
export TF_VAR_server_certificate_arn=""

# If you want to automatically send usage data to Spacelift, uncomment the following variable.
#export TF_VAR_enable_automatic_usage_data_reporting="true"
```

Note

The admin login/password combination is only used for the very first login to the Spacelift instance. It can be removed after the initial setup. More information can be found in the initial setup section.
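Because OpenTofu only sees variables that are exported, a quick check before applying can catch a forgotten `export`. The snippet below is a minimal sketch; `require_exported` is a hypothetical helper name, and it relies on `printenv`, which only reports exported variables. `TF_VAR_server_certificate_arn` is left out of the usage example because it may legitimately be empty.

```shell
# require_exported: hypothetical helper that checks each named variable is
# exported and non-empty. Prints "unset: <name>" per missing variable and
# returns 1 if any are missing.
require_exported() {
  local status=0
  local name
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "unset: $name"
      status=1
    fi
  done
  return "$status"
}

# Usage, with the variable names from the block above:
#   require_exported TF_VAR_aws_region TF_VAR_server_domain TF_VAR_mqtt_domain \
#     TF_VAR_admin_username TF_VAR_admin_password TF_VAR_spacelift_version \
#     TF_VAR_license_token
```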

Below is an example of how to use these modules:

```hcl
variable "aws_region" {
  type        = string
  description = "The AWS region you want to install Spacelift into."
  default     = "eu-north-1"
}

variable "server_domain" {
  type        = string
  description = "The domain name you want to use for your Spacelift instance (e.g. spacelift.example.com)."
}

variable "mqtt_domain" {
  type        = string
  description = "The domain name you want to use for MQTT communication with the workers (e.g. mqtt.spacelift.example.com)."
}

variable "admin_username" {
  type        = string
  description = "The admin username for initial setup purposes."
}

variable "admin_password" {
  type        = string
  description = "The admin password for initial setup purposes."
}

variable "spacelift_version" {
  type        = string
  description = "The version of Spacelift being deployed. This is used to decide what ECR image tag to use."
}

variable "license_token" {
  type        = string
  description = "The license token for selfhosted, issued by Spacelift."
}

variable "server_certificate_arn" {
  type        = string
  description = "The ARN of the certificate to use for the Spacelift server."
}

variable "deploy_services" {
  type        = bool
  description = "Whether to deploy the Spacelift ECS services or not."
  default     = false
}

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = var.aws_region

  default_tags {
    tags = {
      "app" = "spacelift-selfhosted"
    }
  }
}

# Deploy the basic infrastructure needed for Spacelift to function.
module "spacelift-infra" {
  source = "github.com/spacelift-io/terraform-aws-spacelift-selfhosted?ref=v1.3.1"

  region = var.aws_region

  rds_instance_configuration = {
    "primary" : {
      instance_identifier : "primary"
      instance_class : "db.serverless"
    }
  }

  rds_serverlessv2_scaling_configuration = {
    min_capacity = 0.5
    max_capacity = 5.0
  }

  website_endpoint = "https://${var.server_domain}"
}

# Deploy the ECS services that run Spacelift.
module "spacelift-services" {
  count = var.deploy_services ? 1 : 0

  source = "github.com/spacelift-io/terraform-aws-ecs-spacelift-selfhosted?ref=v1.1.0"

  region        = var.aws_region
  unique_suffix = module.spacelift-infra.unique_suffix
  server_domain = var.server_domain

  # NOTE: there's nothing special about port number 1984. Your workers just need to be able to access this port
  # on the Spacelift server.
  mqtt_broker_endpoint = "tls://${var.mqtt_domain}:1984"

  vpc_id = module.spacelift-infra.vpc_id

  server_lb_subnets = module.spacelift-infra.public_subnet_ids

  # It's also possible to reference the certificate resource ARN directly from the previous step.
  # That could be useful if you want to keep a single terraform codebase for both certificate provisioning and the
  # spacelift installation.
  # You can uncomment the line below and get rid of the var.server_certificate_arn variable if you want to do so.
  #server_lb_certificate_arn = aws_acm_certificate_validation.server-certificate.certificate_arn
  server_lb_certificate_arn = var.server_certificate_arn

  server_security_group_id = module.spacelift-infra.server_security_group_id

  mqtt_lb_subnets = module.spacelift-infra.public_subnet_ids

  ecs_subnets = module.spacelift-infra.private_subnet_ids

  admin_username = var.admin_username
  admin_password = var.admin_password

  backend_image      = module.spacelift-infra.ecr_backend_repository_url
  backend_image_tag  = var.spacelift_version
  launcher_image     = module.spacelift-infra.ecr_launcher_repository_url
  launcher_image_tag = var.spacelift_version

  license_token = var.license_token

  database_url           = module.spacelift-infra.database_url
  database_read_only_url = module.spacelift-infra.database_read_only_url

  deliveries_bucket_name               = module.spacelift-infra.deliveries_bucket_name
  large_queue_messages_bucket_name     = module.spacelift-infra.large_queue_messages_bucket_name
  metadata_bucket_name                 = module.spacelift-infra.metadata_bucket_name
  modules_bucket_name                  = module.spacelift-infra.modules_bucket_name
  policy_inputs_bucket_name            = module.spacelift-infra.policy_inputs_bucket_name
  run_logs_bucket_name                 = module.spacelift-infra.run_logs_bucket_name
  states_bucket_name                   = module.spacelift-infra.states_bucket_name
  uploads_bucket_name                  = module.spacelift-infra.uploads_bucket_name
  uploads_bucket_url                   = module.spacelift-infra.uploads_bucket_url
  user_uploaded_workspaces_bucket_name = module.spacelift-infra.user_uploaded_workspaces_bucket_name
  workspace_bucket_name                = module.spacelift-infra.workspace_bucket_name

  kms_encryption_key_arn = module.spacelift-infra.kms_encryption_key_arn
  kms_signing_key_arn    = module.spacelift-infra.kms_signing_key_arn
  kms_key_arn            = module.spacelift-infra.kms_key_arn

  drain_security_group_id     = module.spacelift-infra.drain_security_group_id
  scheduler_security_group_id = module.spacelift-infra.scheduler_security_group_id

  server_log_configuration = {
    logDriver : "awslogs",
    options : {
      "awslogs-region" : var.aws_region,
      "awslogs-group" : "/ecs/spacelift-server",
      "awslogs-create-group" : "true",
      "awslogs-stream-prefix" : "server"
    }
  }

  drain_log_configuration = {
    logDriver : "awslogs",
    options : {
      "awslogs-region" : var.aws_region,
      "awslogs-group" : "/ecs/spacelift-drain",
      "awslogs-create-group" : "true",
      "awslogs-stream-prefix" : "drain"
    }
  }

  scheduler_log_configuration = {
    logDriver : "awslogs",
    options : {
      "awslogs-region" : var.aws_region,
      "awslogs-group" : "/ecs/spacelift-scheduler",
      "awslogs-create-group" : "true",
      "awslogs-stream-prefix" : "scheduler"
    }
  }
}

output "server_lb_dns_name" {
  value = var.deploy_services ? module.spacelift-services[0].server_lb_dns_name : ""
}

output "mqtt_lb_dns_name" {
  value = var.deploy_services ? module.spacelift-services[0].mqtt_lb_dns_name : ""
}

output "shell" {
  value = module.spacelift-infra.shell
}

output "tfvars" {
  value     = module.spacelift-infra.tfvars
  sensitive = true
}
```

Feel free to take a look at the documentation for the terraform-aws-ecs-spacelift-selfhosted module before applying your infrastructure in case there are any settings that you wish to adjust. Once you are ready, run tofu init followed by tofu apply to apply your changes.

Once applied, export the variables needed for the next steps into your shell. For convenience, the infrastructure module exposes a shell output that you can evaluate directly:

```shell
# Source in your shell all the required env vars to continue the installation process
$(tofu output -raw shell)

# Output the required tfvars that will be used in further applies. Note that the ".auto.tfvars"
# filename is being used to allow the variables to be automatically loaded by OpenTofu.
tofu output -raw tfvars > spacelift.auto.tfvars
```

Info

During this guide you'll export shell variables that are needed in later steps, so please keep the same shell open for the entire guide.

Push images to Elastic Container Registry

Assuming you have sourced the shell output as described in the previous section, you can run the following commands to upload the container images to your container registries and the launcher binary to the binaries S3 bucket:

```shell
# Login to the private ECR
aws ecr get-login-password --region "${AWS_REGION}" | docker login --username AWS --password-stdin "${PRIVATE_ECR_LOGIN_URL}"

tar -xzf "self-hosted-${TF_VAR_spacelift_version}.tar.gz" -C .

docker image load --input="self-hosted-${TF_VAR_spacelift_version}/container-images/spacelift-launcher.tar"
docker tag "spacelift-launcher:${TF_VAR_spacelift_version}" "${LAUNCHER_IMAGE}:${TF_VAR_spacelift_version}"
docker push "${LAUNCHER_IMAGE}:${TF_VAR_spacelift_version}"

docker image load --input="self-hosted-${TF_VAR_spacelift_version}/container-images/spacelift-backend.tar"
docker tag "spacelift-backend:${TF_VAR_spacelift_version}" "${BACKEND_IMAGE}:${TF_VAR_spacelift_version}"
docker push "${BACKEND_IMAGE}:${TF_VAR_spacelift_version}"

aws s3 cp --no-guess-mime-type "./self-hosted-${TF_VAR_spacelift_version}/bin/spacelift-launcher" "s3://${BINARIES_BUCKET_NAME}/spacelift-launcher"
```
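Since the ECS services will fail to start if the expected image tags are missing, you may want to confirm the uploads before moving on. The following is a minimal sketch: `repo_name` and `image_pushed` are hypothetical helper names, and it assumes `LAUNCHER_IMAGE` and `BACKEND_IMAGE` hold ECR repository URLs of the form `<registry-host>/<repository>` (no scheme), as the `docker tag` commands above suggest.

```shell
# repo_name: strips the registry host from an ECR repository URL, e.g.
# "<account>.dkr.ecr.<region>.amazonaws.com/my-repo" becomes "my-repo".
repo_name() {
  echo "${1#*/}"
}

# image_pushed: hypothetical helper that asks ECR for the given tag and prints
# the tags attached to that image. The AWS CLI call fails if the tag is absent.
image_pushed() {
  local repo_url="$1"
  local tag="$2"
  aws ecr describe-images \
    --region "${AWS_REGION}" \
    --repository-name "$(repo_name "$repo_url")" \
    --image-ids "imageTag=${tag}" \
    --query 'imageDetails[0].imageTags' \
    --output text
}

# Usage:
#   image_pushed "${LAUNCHER_IMAGE}" "${TF_VAR_spacelift_version}"
#   image_pushed "${BACKEND_IMAGE}" "${TF_VAR_spacelift_version}"
#   aws s3 ls "s3://${BINARIES_BUCKET_NAME}/spacelift-launcher"
```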

Deploy Spacelift

In this section, we'll deploy the Spacelift services to your ECS cluster, and then deploy an initial worker pool.

Deploy application

To deploy the services, set the following environment variable to enable the services module to be deployed:

```shell
export TF_VAR_deploy_services="true"
```

Now go ahead and run tofu apply again to deploy the ECS cluster and services.

Once this module has been applied successfully, you should be able to set up DNS entries for the server and MQTT broker endpoints using the server_lb_dns_name and mqtt_lb_dns_name outputs.

You need to set two CNAMEs in your DNS zone: one for the main application, pointing your server domain at server_lb_dns_name, and one for the MQTT endpoint used by the workers, pointing your MQTT domain at mqtt_lb_dns_name.
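To see exactly which records to create, you can read the two outputs and pair them with the domains you exported earlier. This is a minimal sketch; `print_dns_records` is a hypothetical helper name, and it assumes `TF_VAR_server_domain` and `TF_VAR_mqtt_domain` are still set in your shell.

```shell
# print_dns_records: hypothetical helper that prints the two CNAME records to
# create, using the server_lb_dns_name and mqtt_lb_dns_name outputs.
print_dns_records() {
  local server_target
  local mqtt_target
  server_target="$(tofu output -raw server_lb_dns_name)"
  mqtt_target="$(tofu output -raw mqtt_lb_dns_name)"
  echo "${TF_VAR_server_domain} CNAME ${server_target}"
  echo "${TF_VAR_mqtt_domain} CNAME ${mqtt_target}"
}

# Usage:
#   print_dns_records
# After creating the records, you can check that they resolve, for example:
#   dig +short CNAME "${TF_VAR_server_domain}"
```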

Next steps

Now that your Spacelift installation is up and running, take a look at the initial installation section for the next steps to take.

Create a worker pool

This section will show you how to deploy EC2-based workers using our terraform-aws-spacelift-workerpool-on-ec2 module.

The first step is to follow the instructions in our worker pool documentation to generate credentials for your worker pool, and to create a new pool in Spacelift.

Once you have a private key and token for your worker pool, set the following Terraform variables:

```shell
# The ID of your worker pool, for example 01JPA4M2M7MCYF8JZBS4447JPA. You can get this from the
# worker pool page in Spacelift.
export TF_VAR_worker_pool_id="<worker-pool-id>"

# The token for your worker pool, downloaded from Spacelift when creating the pool.
export TF_VAR_worker_pool_config="<worker-pool-token>"

# The base64-encoded private key for your worker pool. Please refer to the worker
# pool documentation for commands to use to base64-encode the key.
export TF_VAR_worker_pool_private_key="<worker-pool-private-key>"
```

Next, you can use the following code to deploy your worker pool. Note that this deploys the workers into the same VPC as Spacelift, but this is not required for the workers to function. Please refer to the worker network requirements for more details if you wish to adjust this.

```hcl
variable "vpc_id" {
  type        = string
  description = "The VPC Spacelift is installed into."
}

variable "availability_zones" {
  type        = list(string)
  description = "The availability zones to deploy workers to."
}

variable "private_subnet_ids" {
  type        = list(string)
  description = "The subnets to use for the workers."
}

variable "aws_region" {
  type        = string
  description = "AWS region to deploy resources."
}

variable "worker_pool_id" {
  type        = string
  description = "The ID of the worker pool."
}

variable "worker_pool_config" {
  type        = string
  description = "The worker pool configuration to use."
}

variable "worker_pool_private_key" {
  type        = string
  description = "The worker pool private key."
}

variable "binaries_bucket_name" {
  type        = string
  description = "The name of the S3 bucket that contains the launcher binary."
}

locals {
  launcher_s3_uri = "s3://${var.binaries_bucket_name}/spacelift-launcher"
}

data "aws_security_group" "default" {
  name   = "default"
  vpc_id = var.vpc_id
}

module "default-pool" {
  source = "github.com/spacelift-io/terraform-aws-spacelift-workerpool-on-ec2?ref=v2.15.0"

  configuration = <<-EOT
    export SPACELIFT_TOKEN="${var.worker_pool_config}"
    export SPACELIFT_POOL_PRIVATE_KEY="${var.worker_pool_private_key}"
  EOT

  min_size           = 2
  max_size           = 2
  worker_pool_id     = var.worker_pool_id
  security_groups    = [data.aws_security_group.default.id]
  vpc_subnets        = var.private_subnet_ids
  enable_autoscaling = false

  selfhosted_configuration = {
    s3_uri = local.launcher_s3_uri
  }
}
```

Deletion / uninstall

Before running tofu destroy on the infrastructure, you may want to set the following properties for the terraform-aws-spacelift-selfhosted module to allow the RDS, ECR and S3 resources to be cleaned up properly:

```hcl
module "spacelift-infra" {
  source = "github.com/spacelift-io/terraform-aws-spacelift-selfhosted?ref=v1.3.1"

  # Other settings...

  # Disable delete protection for RDS, S3 and ECR to allow resources to be cleaned up
  rds_delete_protection_enabled = false
  s3_retain_on_destroy          = false
  ecr_force_delete              = true
}
```

Remember to apply those changes before running tofu destroy.
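The ordering matters because the protection settings only take effect on the existing resources once they have been applied. A minimal sketch of that sequence, using a hypothetical `teardown` helper (swap tofu for terraform if needed):

```shell
# teardown: hypothetical helper that applies the updated module settings first,
# and only runs the destroy if that apply succeeds.
teardown() {
  tofu apply && tofu destroy
}

# Usage:
#   teardown
```

Both commands still prompt for confirmation, so you can review each plan before anything is changed or removed.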